DISCO

Sensitive information in image classification

Recent deep learning models have shown remarkable performance in image classification. As these systems move closer to practical deployment, a common assumption is that the data they process carries no sensitive information. This assumption does not hold in many practical cases, especially in domains that involve an individual's personal information, such as healthcare and facial recognition.

Data-driven pruning filter

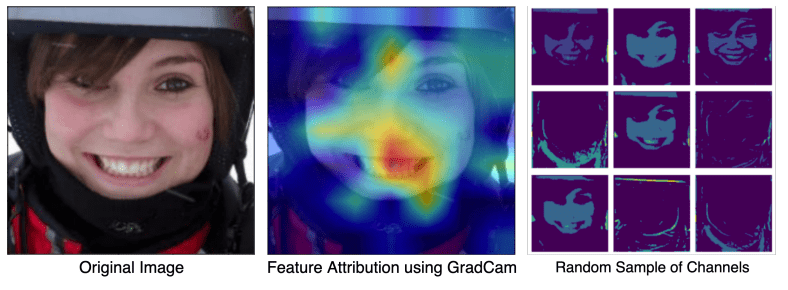

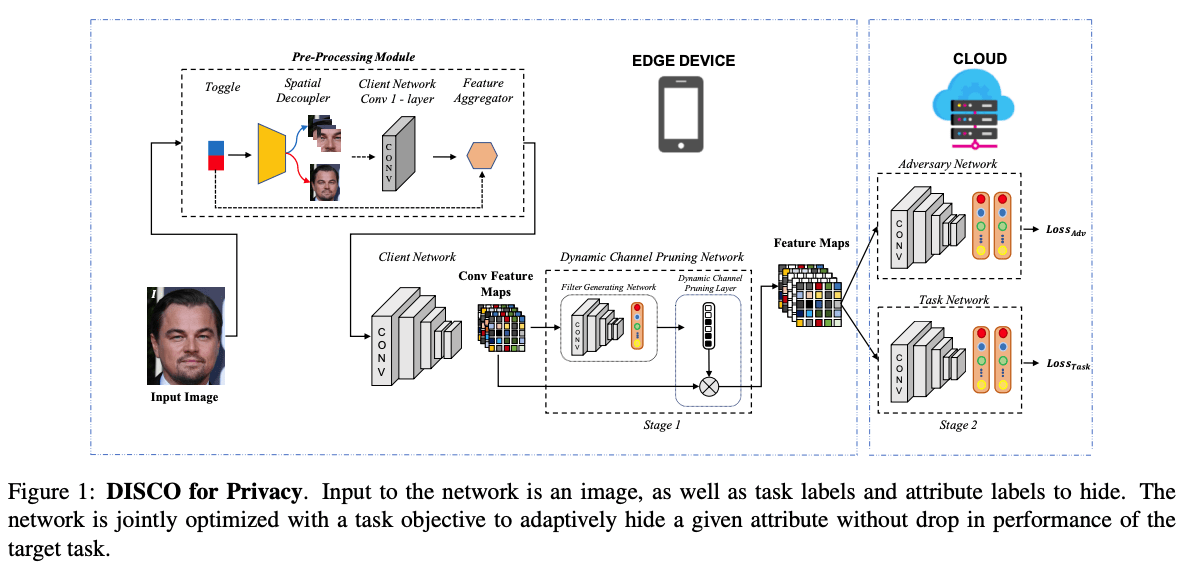

We posit that selectively removing features in the latent space can protect sensitive information and provide a better privacy-utility trade-off. Consequently, we propose DISCO, which learns a dynamic, data-driven pruning filter to selectively obfuscate sensitive information in the feature space. We propose diverse attack schemes for sensitive inputs and attributes, and demonstrate the effectiveness of DISCO against state-of-the-art methods through quantitative and qualitative evaluation. Finally, we release an evaluation benchmark dataset of 1 million sensitive representations to encourage rigorous exploration of novel attack and defense schemes.

Better privacy-utility trade-off


We introduce DISCO, a dynamic scheme for obfuscation of sensitive channels to protect sensitive information in collaborative inference. DISCO provides a steerable and transferable privacy-utility trade-off at inference.
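The channel obfuscation step described above can be illustrated with a minimal sketch. It assumes that each channel already has a sensitivity score and that the highest-scoring fraction of channels is zeroed out. In DISCO the filter is learned from data; here the scores are simply passed in, and the function name `prune_channels`, the flat-list channel layout, and the zeroing rule are hypothetical choices for illustration, not the authors' implementation.

```python
def prune_channels(features, scores, ratio):
    """Zero out the `ratio` fraction of channels with the highest scores.

    features: list of channels, each a flat list of floats (assumed layout).
    scores:   one sensitivity score per channel; a learned filter would
              produce these, here they are given as input (assumption).
    ratio:    pruning ratio R in [0, 1], adjustable at inference time.
    """
    if len(features) != len(scores):
        raise ValueError("need exactly one score per channel")
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must lie in [0, 1]")

    num_pruned = round(ratio * len(features))
    # Channel indices ordered from most to least sensitive.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    pruned = set(order[:num_pruned])

    # Pruned channels become all zeros; the rest are copied unchanged.
    return [
        [0.0] * len(channel) if i in pruned else list(channel)
        for i, channel in enumerate(features)
    ]


# Toy activation with 5 channels of 2 values each.
features = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0], [9.0, 10.0]]
scores = [0.9, 0.1, 0.8, 0.2, 0.7]
obfuscated = prune_channels(features, scores, ratio=0.6)
```

Because `ratio` is an ordinary argument, the same scores can be reused with a different pruning ratio at inference, which is one simple way to picture a steerable privacy-utility trade-off.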

Diverse attack schemes


We propose diverse attack schemes for sensitive inputs and attributes, and achieve significant performance gains over existing state-of-the-art methods across multiple datasets.

Benchmark dataset


To encourage rigorous exploration of attack schemes for private collaborative inference, we release a benchmark dataset of 1 million sensitive representations.

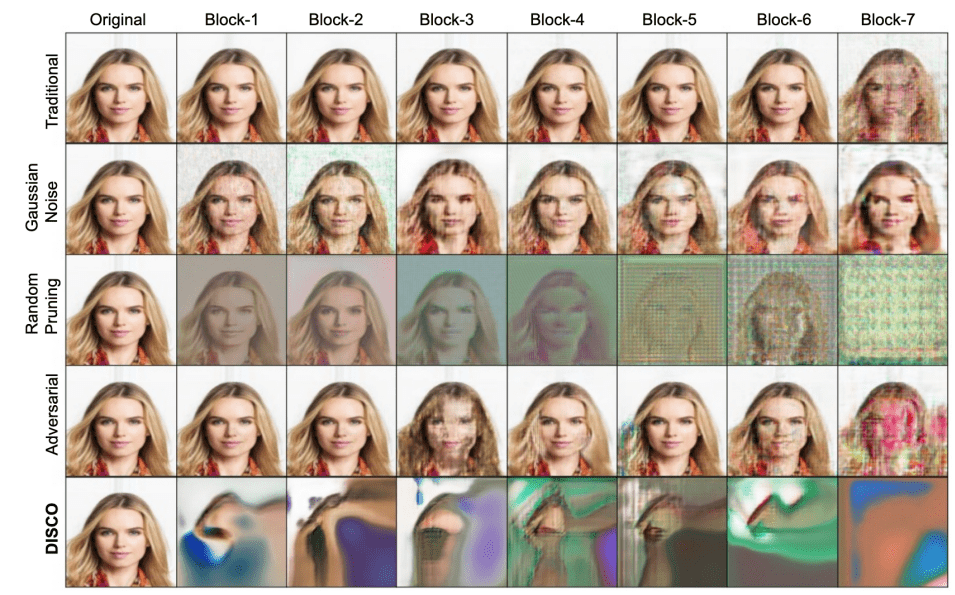

Reconstruction results on CelebA: All reconstructed images are obtained from the activations using the likelihood maximization attack. We generate activations from the ResNet-18 architecture, where a set of convolution, batch normalization, and activation layers is grouped into a block. The first column shows the original sensitive input, and the remaining columns show its reconstruction across different blocks. For Gaussian noise we use µ = −1 and σ = 400, the noise level at which the utility of the learning network drops close to random chance. Adversarial refers to the set of techniques that filter sensitive information using adversarial learning. For DISCO and Random Pruning we use a pruning ratio of R = 0.6.
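For intuition, the two simplest baselines named in the caption can be sketched as follows. This is an assumption-laden illustration: the caption does not say exactly where the noise is injected, so the sketch adds it element-wise to the activations, and it treats Random Pruning as zeroing a uniformly random subset of channels. The function names and the flat-list channel layout are hypothetical.

```python
import random


def add_gaussian_noise(features, mu, sigma, rng):
    """Add independent Gaussian noise N(mu, sigma^2) to every activation value."""
    return [[x + rng.gauss(mu, sigma) for x in channel] for channel in features]


def random_prune(features, ratio, rng):
    """Zero out a uniformly random `ratio` fraction of channels."""
    num_pruned = round(ratio * len(features))
    pruned = set(rng.sample(range(len(features)), num_pruned))
    return [
        [0.0] * len(channel) if i in pruned else list(channel)
        for i, channel in enumerate(features)
    ]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
features = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0], [9.0, 10.0]]

# Values taken from the caption: mu = -1, sigma = 400, R = 0.6.
noisy = add_gaussian_noise(features, mu=-1.0, sigma=400.0, rng=rng)
randomly_pruned = random_prune(features, ratio=0.6, rng=rng)
```

Unlike a learned filter, neither baseline looks at the data: the noise is the same for every input, and the pruned channels are chosen without regard to how much sensitive information they carry.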

People

Abhishek Singh

Ayush Chopra

Ethan Garza

Emily Zhang

Praneeth Vepakomma

Vivek Sharma

Ramesh Raskar